(Editor’s note: this post was originally published in the Spanish version of Security Art Work on 4th July 2019)

Today we publish the second of three articles courtesy of Jorge Garcia on the importance of server hardening. You can find the first one here: The importance of server hardening – I

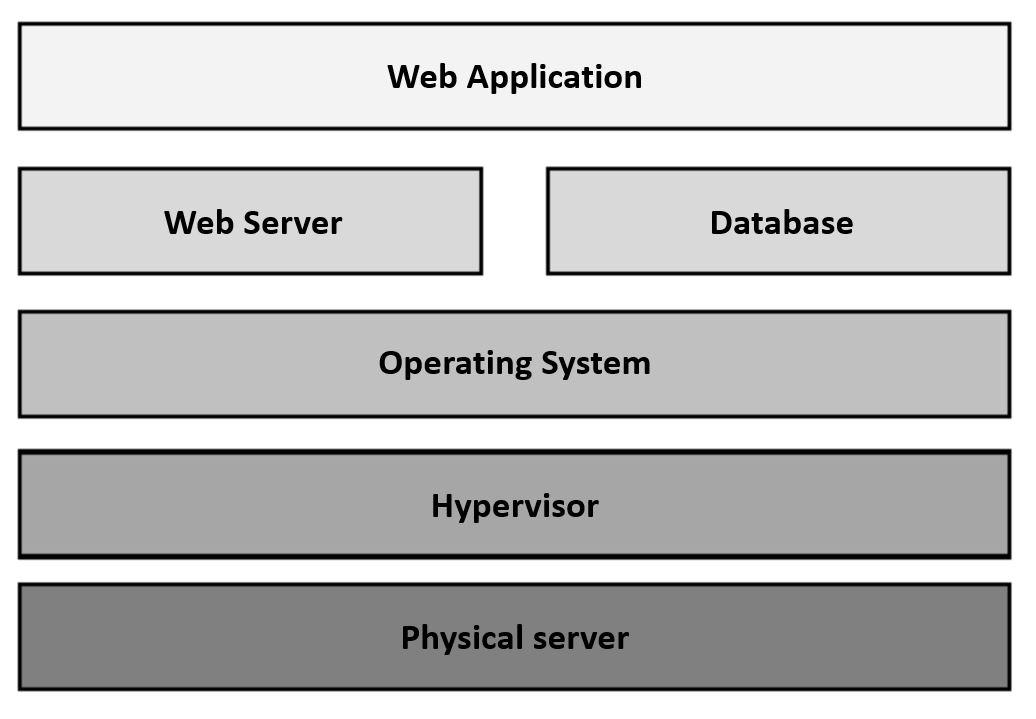

All right, we have the mission of hosting an online commerce web application and offering it to the world on a server that we own. Our goal is to make it as impregnable as possible at all levels. Since it is a web application, it is foreseeable that the main attack entry vector is through vulnerabilities of the application itself. Really, let’s not fool ourselves, all CMS are sure candidates for severe vulnerabilities. The scheme of how the platform will be organized is the usual one in a virtual server:

Therefore, the issue is to choose a CMS with these premises:

- That it is actively developed and supported by a large community of developers or by a large company. This ensures that when a vulnerability is published, it is quickly corrected.

- That the installed CMS is the latest available version of a supported branch, and that the branch is expected to remain supported for quite some time. Do not forget that, since we do not have a development environment at home, updates or migrations mean a loss of service, which in turn means a potential loss of money.

- That it is compatible with the operating system of the server that we have. A consideration that is obvious but important.

- That its history of critical vulnerabilities is as short as possible. A CMS that is actively developed and well supported, but in which a critical vulnerability is found every week on average, is neither viable to maintain nor safe to use.

As the person ultimately responsible for the security of the data hosted by the application, I need to have all the necessary measures in place to keep that data sufficiently secure. For this reason, I consider it essential to have:

- Modern and updated operating system.

- Installation of the minimum amount of software required.

- Firewall software on the server itself.

- Process management with SELinux.

- Web application firewall.

- Apache web server.

- Web application updated to the latest version available.

- Suricata IDS to monitor traffic.

- HTTP traffic analysis using web visit analysis tool.

- Stay alert to cybersecurity news

Modern and updated operating system

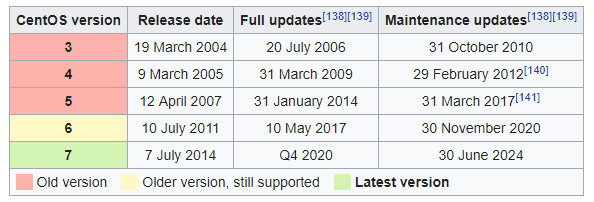

Let’s go point by point. The first thing is to have a modern and updated operating system. This is important because an unsupported operating system (for example: CentOS 5, Ubuntu 14.04 or Debian 6, to name a few) implies that, when a vulnerability is detected in some component of the system, the manufacturer will not be required to publish – and generally will not – security updates to remedy it, which will leave the system vulnerable. It is a matter of time before the system is compromised. In this case, the server runs CentOS 7 updated to the latest available version, which has security support until June 2024, which is a reasonable period of time before having to migrate to a more recent version.

Installation of the minimum amount of software required

An important point is that the fewer software packages we install, the smaller our attack surface. Installing many packages and dependencies “in case they are needed in the future”, or simply because we are not sure whether they are needed, greatly increases the chances that an attack succeeds.

For example, if we want a web server to serve an application, we will install only the web server package. If the application requires PHP or a similar language, only the required module or minimum libraries will be installed. It is very common to read tutorials on the Internet that install all Apache modules, or PHP integrations with all database backends, even when the server has only one database configured or sometimes none. This is a bad practice that generally shows ignorance of the technical requirements of the application we want to deploy.

In this case, our server runs CentOS 7 with Apache and PHP (due to the requirements of my partner’s application). An important observation is that many CMS require a specific version of PHP, which leads system administrators to add any random repository that provides that version or, even worse, to install it from source. This is not a recommended practice. Although the PHP version in the official CentOS / Debian / Ubuntu repositories may be outdated in terms of version number, we can rely on vulnerabilities being corrected quickly, since it is an official repository.

If the CMS requires a specific version of PHP that is more modern than the one included in the official repositories, it must be deployed from a repository that inspires trust and guarantees, on the one hand, that we will have future updates and, on the other hand, that the software it provides is legitimate and unmodified. In the case of CentOS, we can take the following repositories as trustworthy references:

- EPEL. Managed by the Fedora community. The most recommended

- Atomic. Administered by Atomicorp. Highly recommended

- Remi. Managed by Remi, one of the official PHP packagers

- Webtatic. Managed by Andy Thompson. Provides GPG-signed packages

An important factor with these repositories is that they often conflict with the packages provided by other repositories, including the official ones. This occurs because the same package (e.g., PHP) is provided by several repositories at the same time with different version numbers. This leads many administrators to add the repo, install the package and then disable the repo to prevent inconsistencies when updating the system. This practice is incorrect, since it leaves the packages we installed from that repo without updates.

Needless to say, installing a package from source is a last resort and should be avoided unless we know beforehand that we will have the mechanisms and resources to recompile each future version and make sure that everything keeps working (let’s not forget that scheduled stops lead to loss of service in our installation and that we have no development environment).

Firewall software on the server itself

A good practice on any server, accessible from the Internet or not, is to run firewall software on the machine itself to guarantee that only the ports the system administrator considers necessary for the service are accessible. For example, a web server will generally need TCP ports 80 (HTTP) and 443 (HTTPS) to be reachable. If the server is going to be administered over SSH (not from the Internet, please), then TCP port 22 (SSH) also needs to be open.

And why is this local port filtering necessary? Because if the server is infected with malware that opens a listening port, the firewall will prevent that port from being reached from another machine. That is, the malware could open a listening port but the firewall would not allow access to it, which would greatly limit its spread.

Luckily, CentOS 7 ships with the firewalld firewall as standard. We only need to allow traffic to the desired ports. In our case, we enable port 80, where the server immediately redirects to the HTTPS port, and port 443.

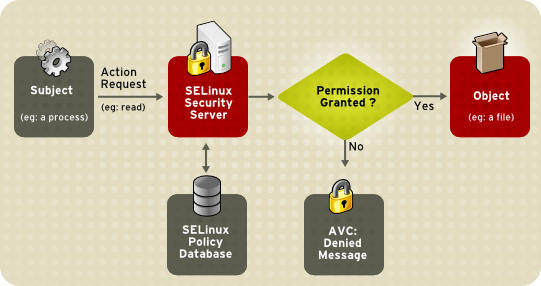
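One way to confirm from a second machine that the firewall exposes only the intended ports is a small TCP probe. The following is a minimal sketch in Python, not the configuration used in this article; the function names and the list of probed ports are illustrative assumptions.

```python
import socket

# Ports we expect the firewall to expose (from the text: HTTP and HTTPS).
EXPECTED_OPEN = {80, 443}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution errors.
        return False


def unexpected_open_ports(host, ports, expected=EXPECTED_OPEN):
    """Return the sorted probed ports that are open but not expected to be."""
    return sorted(p for p in ports if p not in expected and is_port_open(host, p))
```

For instance, `unexpected_open_ports("shop.example.com", [21, 22, 25, 80, 443, 3306])` (with a placeholder host name) should return an empty list when run from outside the server if only ports 80 and 443 are allowed.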

Process management with SELinux

Although this is not the time to talk about it, some attacks on web servers or the application itself involve the downloading of malware – usually in stages – into temporary directories by the infected server. Once the downloads are complete, the malware is run by the web service itself with the permissions it has.

One way to mitigate this in CentOS is by using SELinux. This tool restricts the behavior of a running service to the cases in which the service is expected to operate. For example, for a web server it is considered normal to open certain standard ports or to serve content from /var/www, but it is generally not considered normal to send emails (web forms aside), nor is it usual to access or delete arbitrary files on the filesystem. Of course there may be applications where this is legitimate, but we are talking about the general case. SELinux restricts those non-standard or suspicious actions that a process attempts. Naturally, SELinux can be configured manually to allow some actions that are blocked by default (e.g. sending emails from a web form).

It is very common to read tutorials on the Internet about “How to deploy the X application in CentOS”, and most cite “Disable SELinux” as one of their first steps “to avoid problems”. Problems are avoided by setting it up! Disabling SELinux is a very inadvisable practice.

Web application firewall

Although only TCP ports 80 and 443 are open in the machine’s firewall, and even if we have SELinux configured to prevent anomalous behavior, the web application will always be susceptible to attacks such as SQL injection, XSS, credential reuse, DoS, etc. These types of attacks are never blocked by the machine’s firewall (which only blocks traffic to or from ports other than those mentioned) nor by SELinux, since many of these attacks rely on the normal operation of the application (usually taking advantage of some known vulnerability).

For this reason there are web application firewalls. This type of firewall inspects web traffic to and from the application for non-standard or suspicious requests, for example those that carry an SQL statement in the URL itself or that call a file upload resource in a CMS (upload.php or similar). In the case of CentOS, the most common tool for this purpose is Mod Security. It is an Apache module that, properly configured, protects us from many of these threats.

Mod Security’s default setting is quite restrictive and, as with an IDS, it also works on the basis of frequently updated signatures (usually through a repository). This allows you to detect web attacks that have only recently begun to be used. Unlike an IDS (in detection mode), Mod Security acts by blocking the requests it considers suspicious.

Fortunately, Mod Security includes some automatic settings to correct false positives in some standard applications such as WordPress or Owncloud (for example, when the application requires an HTTP method other than GET or POST, such as DELETE or PUT, which is common in cloud storage applications).

An interesting aspect is that Mod Security correlates events by maintaining a level of suspicion per source IP, based on the repetition and pattern of the analyzed requests. That is, even if each request has a low suspicion level, if many of the same type arrive from the same IP within a certain time interval, the firewall blocks the IP and answers its requests with a 403 until the IP is unblocked (manually or automatically after a certain time). Each source IP accumulates a level of suspicion that varies over time based on the requests it makes. If the level reaches a certain threshold, the source IP is blocked.
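The idea of a per-IP suspicion level that builds up with each request, fades over time and triggers a block at a threshold can be illustrated with a toy model. This is a simplified sketch in Python and not Mod Security’s actual implementation; the class name, the linear decay and all the numeric defaults are assumptions made for illustration.

```python
import time
from collections import defaultdict


class SuspicionTracker:
    """Toy per-IP scoring: scores decay over time, IPs are blocked at a threshold."""

    def __init__(self, threshold=10.0, decay_per_second=0.1, block_seconds=600.0):
        self.threshold = threshold
        self.decay_per_second = decay_per_second
        self.block_seconds = block_seconds
        self._score = defaultdict(float)
        self._last_seen = {}
        self._blocked_until = {}

    def is_blocked(self, ip, now=None):
        now = time.time() if now is None else now
        return now < self._blocked_until.get(ip, 0.0)

    def record(self, ip, severity, now=None):
        """Add `severity` to the IP's score and return the HTTP status to answer with."""
        now = time.time() if now is None else now
        if self.is_blocked(ip, now):
            return 403
        # Let the previous score decay linearly with the time elapsed.
        elapsed = now - self._last_seen.get(ip, now)
        decayed = max(0.0, self._score[ip] - elapsed * self.decay_per_second)
        self._score[ip] = decayed + severity
        self._last_seen[ip] = now
        if self._score[ip] >= self.threshold:
            # Threshold reached: block the IP for a while and reset its score.
            self._blocked_until[ip] = now + self.block_seconds
            self._score[ip] = 0.0
            return 403
        return 200
```

With this model, a burst of low-severity requests from one address accumulates enough score to be blocked, while the same requests spread over a long period decay away before reaching the threshold.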

This means that, even if an application is vulnerable, it still has the protection of the application firewall, which adds an important layer of security to the system.

In the case at hand, the CMS chosen had to be customized by hand, since there is no predefined template for Mod Security.

Apache web server

Every web application is served by a web server. In the case at hand, the web server I use is Apache because of its versatility, security and compatibility. Since it is one of the key components of the system, it is essential that it be kept up to date. One of the many advantages of Apache is its vast number of modules that integrate it with other technologies, such as PHP, Mod Security, proxies, etc.

In addition to being updated, the web server must be properly configured to avoid exposing more information than strictly necessary and also reducing the attack surface, as well as mitigating possible vulnerabilities. A correct hardening of the Apache server must include:

- Do not reveal the Apache version or, better yet, deliberately display another version or technology in order to confuse the attacker. In my case, I have modified the banner the server shows to make the visitor believe that it is running Microsoft IIS. It is incredible how many attacks the IDS detects trying to exploit vulnerabilities of that product. This automatically protects us from them, as they try to exploit vulnerabilities of an operating system and web server different from the ones we are actually running. Full-fledged security through obscurity.

- Do not enable directory listing. This prevents access to the web directory files manually through the URL.

- Disable unnecessary modules that, by default, are active. It is important to know well which modules the application we want to serve needs.

- Disable the Follow Symlinks option. In this way we avoid that in the face of a vulnerability that allows creating files in the web directory, the attacker can create a symbolic link to system content (for example, to the /etc/passwd file).

- Limit the size of the HTTP request. This mitigates DoS-type attacks, since it prevents attackers from sending very large requests, which are allowed by default.

- Disable browsing outside the web directory. This mitigates a huge number of attacks based on entering sequences in the URL that move up the directory tree (for example /../../etc/passwd).

- Configure the HTTP X-FRAME-OPTIONS header so that only the page we serve can be contained within an iframe from the page we are serving (SAMEORIGIN). This mitigates clickjacking attacks, where the page we serve is embedded within an iframe of another fraudulent page that captures the information entered in the iframe.

- Restrict HTTP methods that are not useful for the applications we serve. By default Apache serves a multitude of HTTP methods that increase the attack surface. It is common to observe connection attempts using HTTP methods associated with VoIP SIP connections trying to exploit some known vulnerability. Therefore, if the application only needs, for example, HEAD, POST and GET, only those methods should be enabled.

- Enable Cross-site Scripting (XSS) protection. This type of attack generally requires the application to allow user interaction via input fields, such as forum comments. If the application does not sanitize the field entered by the user, the user can enter arbitrary code that will be executed each time that page is displayed (for example, the forum comment mentioned above).

- Disable the TRACK/TRACE methods. These methods are designed for debugging and allow XSS attacks against the server, since the response to a TRACE request echoes back the request it received.

- Run the Apache process as an unprivileged user. As already mentioned in the SELinux section, when malware executes code it does so with the privileges available to the web service, so it is important that these are as minimal as possible.

- Use TLS certificates exclusively, with redirection from port 80 to 443.

- Only allow TLS ciphers that are secure, robust and non-vulnerable.
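Several of the points above can be checked from the outside by looking at the response headers the server returns. The following Python sketch covers only three of them (version banner, X-Frame-Options and the methods advertised in an `Allow` header); the function name and the set of allowed methods are assumptions based on the example in the list, not part of any Apache tooling.

```python
# Methods the example application is assumed to need (taken from the list above).
ALLOWED_METHODS = {"GET", "HEAD", "POST"}


def audit_headers(headers):
    """Return a list of findings for a mapping of HTTP response headers."""
    # Header names are case-insensitive, so normalise them first.
    h = {name.lower(): value for name, value in headers.items()}
    findings = []

    # A banner containing digits usually leaks a version (e.g. "Apache/2.4.6").
    server = h.get("server", "")
    if any(ch.isdigit() for ch in server):
        findings.append(f"Server banner may reveal a version: {server!r}")

    if h.get("x-frame-options", "").strip().upper() != "SAMEORIGIN":
        findings.append("X-Frame-Options is not set to SAMEORIGIN")

    # An Allow header (e.g. in the reply to an OPTIONS request) lists offered methods.
    if "allow" in h:
        offered = {m.strip().upper() for m in h["allow"].split(",") if m.strip()}
        extra = sorted(offered - ALLOWED_METHODS)
        if extra:
            findings.append("Unneeded HTTP methods offered: " + ", ".join(extra))

    return findings
```

The function accepts any mapping with an `items()` method, so it can be fed the `headers` attribute of a response obtained with `urllib.request.urlopen`. An empty result only means that these three checks passed, not that the server is fully hardened.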

Web application updated to the latest version available

This is a classic. In the end, the vast majority of vulnerabilities occur in the application, so it is expected that this is where we put the most effort into prevention.

The first thing to consider is whether we really need to deploy an additional application for the task we want to carry out. Often companies deploy many applications because they believe they improve the business or productivity of the company, but rarely stop to assess the cost of systems administration to keep the application updated. Many times you can introduce this functionality in another application that is already available – usually paying some opportunity cost – instead of deploying a new application. In the case at hand, since it is the only store I host, I have no choice but to deploy a dedicated application.

When planning the deployment of a new application it is important to choose a solution that is under active maintenance, that is, that in the event of a possible vulnerability, it will be patched as soon as possible. Generally a large community of developers and/or a large company that supports the product is usually a sufficient guarantee.

In the case at hand, when choosing Prestashop I took into account how long it has been in development (the PrestaShop, SA company was founded in 2007, although the project dates from 2005). This means that the company has been developing the application in its different versions for 12 years, which suggests agility in resolving incidents.

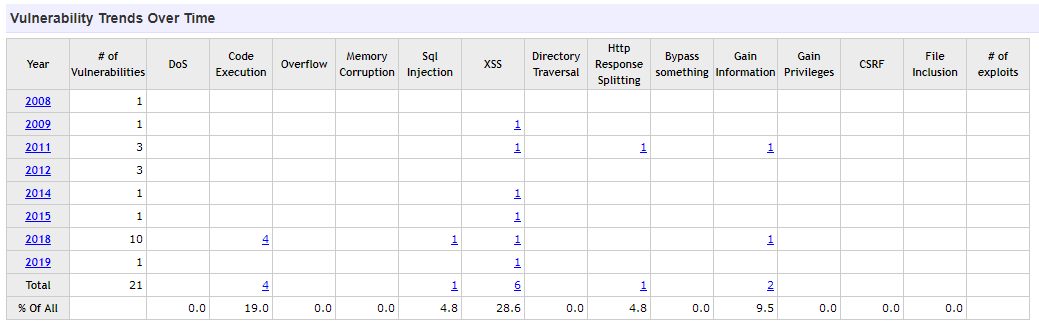

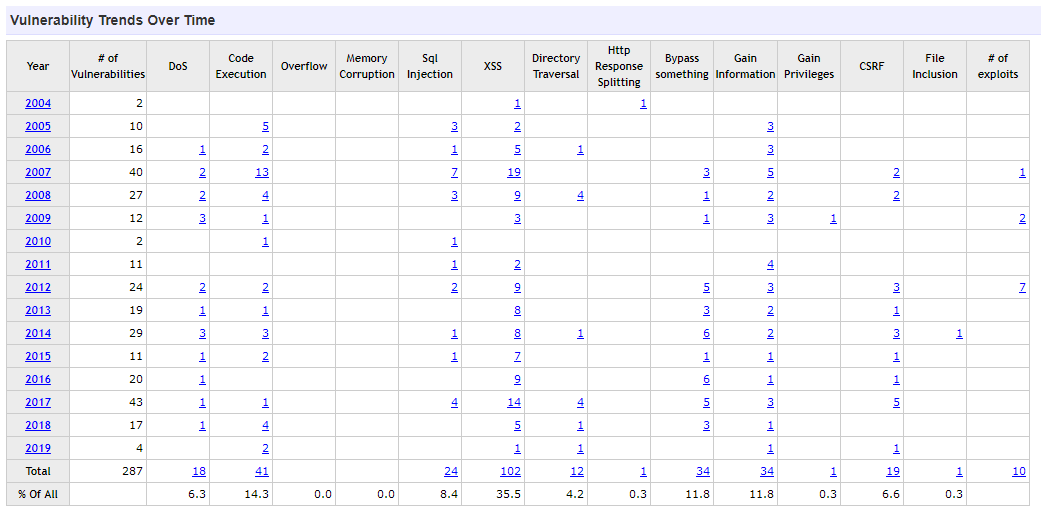

Another factor to consider is the history of vulnerabilities, and the frequency and criticality with which they appear. An application that is updated often but also presents vulnerabilities very often requires a great effort from the system administrator to keep it up to date.

Prestashop has 21 CVEs registered since 2008, half of them last year, with an average of 2.6 vulnerabilities per year:

We can see that this is a much lower rate than that of other popular CMS such as WordPress, with 219 CVEs since 2008 and an average of 19.1 per year:

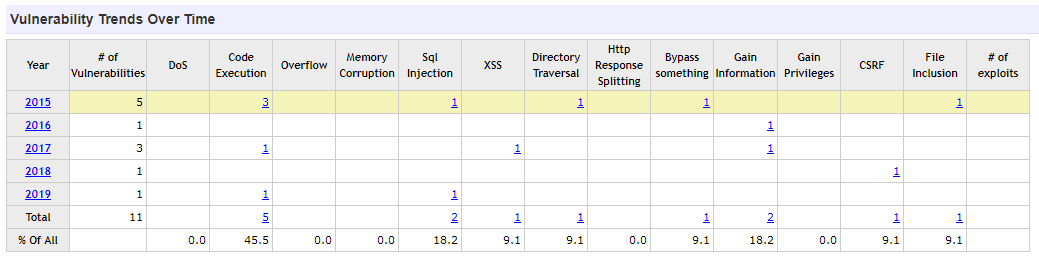

A solution that offers similar online commerce functionality is Magento, a much younger and somewhat less mature application than Prestashop, with 11 CVEs in 5 years, an average of 2.2 vulnerabilities per year:

The choice of Prestashop over Magento was made considering as criteria the functionality of the modules, theme library and ease of administration, among other factors.

It is also important to assess the life span of each branch of the product. Some manufacturers invest a lot of resources by developing new versions of the product, which sometimes means that previous versions lose support quickly, forcing customers to be always updated to the latest branch. This often means that the customer has to invest additional time adapting the visual themes to the new branch or that some third-party plugins are not compatible, having to choose between assuming a loss of functionality or being vulnerable. This is not desirable, especially when there is no official branch update path and everything is based on making a copy and migrating the data by hand.

In the case of Prestashop, we observe that the last two branches published, 1.6 and 1.7, coexist under active maintenance and have been in continuous development for quite some time:

- Branch 1.7. Version 1.7.0 published in November 2016. Current 1.7.5.2 May 2019.

- Branch 1.6. Version 1.6.0.5 published in March 2014. Current 1.6.1.24 May 2019.

This implies that a customer on branch 1.6, even one who deployed it in 2014, would still have security support. This is a great advantage.

All this leads us to monitoring. We need an agile way of finding out when a new version has come out in order to plan the update as soon as possible. The most devastating attacks can occur just when the vulnerability is published, especially if it is critical or easy to exploit, because it is easy to catch us off guard. Correctly monitoring the installed version of the application against the available versions is essential. The most common practice is to deploy a custom check for Nagios, Zabbix or whichever monitoring tool is in use, so that it checks this automatically and generates an event/alert to plan the update.
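A custom check of this kind can be very small. The sketch below follows the standard Nagios plugin convention of exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN) and compares two version strings; how the installed and latest versions are obtained is deliberately left out, and the function names and the choice of WARNING for an outdated version are assumptions.

```python
# Nagios plugin exit codes (standard convention).
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def parse_version(text):
    """Turn '1.7.5.2' into (1, 7, 5, 2) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


def check_version(installed, latest):
    """Return (exit_code, message) comparing installed vs. latest version."""
    try:
        inst, new = parse_version(installed), parse_version(latest)
    except ValueError:
        return UNKNOWN, f"UNKNOWN - cannot parse {installed!r} / {latest!r}"
    if inst >= new:
        return OK, f"OK - version {installed} is current"
    return WARNING, f"WARNING - {installed} installed, {latest} available"


def main(argv):
    """Entry point: argv is [script, installed_version, latest_version]."""
    if len(argv) != 3:
        print("UNKNOWN - usage: check_version.py INSTALLED LATEST")
        return UNKNOWN
    code, message = check_version(argv[1], argv[2])
    print(message)
    return code
```

When used as a plugin, the script would end with `sys.exit(main(sys.argv))` so that the monitoring tool receives the exit code. Comparing tuples of integers rather than raw strings avoids treating 1.7.10 as older than 1.7.9.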

Suricata IDS to monitor traffic

Even if we have the system correctly hardened, an application updated to the latest supported version, and additional aids such as SELinux or Mod Security, it is always convenient to have a method to identify possible attacks, successful or not, so that we can see the alerts for these incidents at a glance instead of diving through all the system logs in search of anomalies.

An excellent solution for this is to have an IDS in our network that analyzes all incoming and outgoing traffic from the server in search of possible attempts to exploit any vulnerability. Although there are many solutions on the market, the best known free open source solutions are Snort and Suricata. The first dates from 1998 while the second is much more recent, dating from 2010. Snort is currently developed by Cisco while Suricata is developed by the Open Information Security Foundation (OISF).

Suricata corrects some of Snort’s biggest limitations, mainly by supporting processor multithreading, which is vital in networks that handle a large number of packets per second. Some (not all) Snort rule sets are compatible with Suricata, which lets it benefit from the large number of rules developed by the Snort community, much more numerous than Suricata’s own because of Snort’s much longer life span.

Thinking not only of protecting the new Prestashop online store but also the other portals and services I host, I decided some time ago that it was time to improve security by placing a Suricata IDS at the perimeter, to analyze traffic to and from the DMZ where the servers are located and to obtain more information about possible attacks. It was an absolutely brilliant idea. Seeing the number and sophistication of the exploitation attempts against our network gives us a realistic picture of how active the cybersecurity environment is, even though most of the time we don’t notice it.

In the case at hand, I took advantage of the fact that the network firewall I already had in use is pfSense, which has an integrated Suricata module, and I configured it. In this way, existing hardware resources are reused, the attack surface is minimized and, for a home network, performance is usually more than acceptable.

The next step is to configure the specific rule sets for the traffic we want to monitor, usually the rule sets corresponding to the web application, malware and the server operating system. The more rule sets we have active, the more time the IDS will spend checking whether each packet matches any of them, so it is convenient to activate only those that provide useful information (here, less is more). Rules that make a lot of noise and do not provide important information generally distract the analyst from those that are relevant, so we have to find a middle ground between wanting to know everything, relevant or not, and accepting the remote (but not zero) possibility of missing an alert due to a false negative.

In the case at hand, given that the volume of traffic is very low (a few hundred visits per day) and that I alone manage the infrastructure, I would rather miss an alert than have to process thousands of them that provide little information, since I do not have the necessary time or resources.

Traffic analysis using a web analytics tool

This advice is my own and I have not seen it reflected anywhere else, but I consider it very relevant. We agree that with an IDS in place and all the measures described above, the application has a very acceptable level of security, but there is a social engineering factor that we can still add.

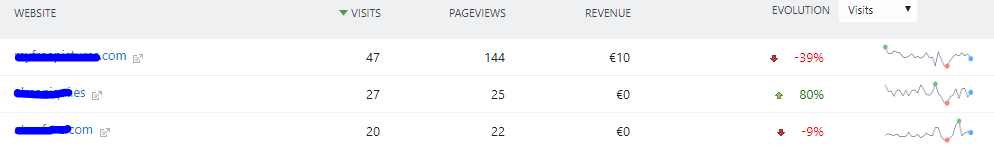

My contribution consists of analyzing the web server logs corresponding to the application (the store in this case) by means of a web statistics application like Google Analytics or, better still, a self-hosted solution like Matomo.

For those who do not know, Matomo “is like a Google Analytics but hosted in your infrastructure and without giving the data to anyone.” Matomo takes the logs of your application directly from the web server and processes them by providing very detailed visual information on the number of visits, the country and region of origin, operating system, browser and add-ons, time spent on the pages, etc. In addition to being enormously useful for getting to know your business audience better and knowing if advertising campaigns work as expected, it is also advantageous from the cybersecurity perspective by providing a brilliant tool to detect unusual peaks in the number of visits, certain user-agents that may be associated with malware, peak visits from some countries with active malware campaigns, and so on.

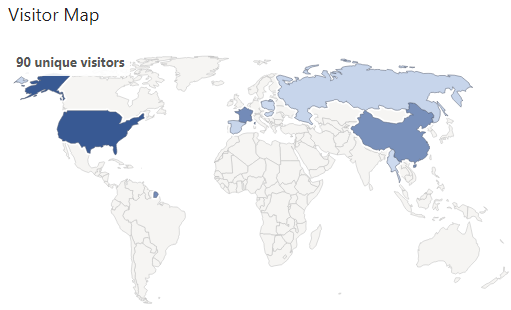

In short, it is another tool to find information when we encounter a possible incident. A simple view of the trend chart of visits can give us an idea of whether we are suffering a DDoS attack (if there is a very sudden increase in the number of visitors) or even alert us of a problem in a firewall rule that we have configured incorrectly (if there is a very sudden decrease in the number of visitors):

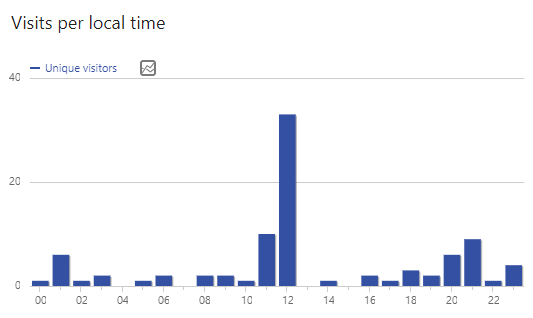
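The same reasoning applied by eye to the trend chart can be automated on exported daily visit counts. The following Python sketch flags days that deviate strongly from the preceding week; it is an illustration only, not a Matomo feature, and the function name, window size and threshold are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(daily_visits, window=7, sigmas=3.0):
    """Return (index, visits, kind) for days far from the mean of the prior window."""
    results = []
    for i in range(window, len(daily_visits)):
        history = daily_visits[i - window:i]
        mu, sd = mean(history), stdev(history)
        # Guard against a perfectly flat history, where the deviation is 0.
        band = sigmas * max(sd, 1.0)
        if daily_visits[i] > mu + band:
            results.append((i, daily_visits[i], "spike"))  # possible DDoS
        elif daily_visits[i] < mu - band:
            results.append((i, daily_visits[i], "drop"))  # possible firewall mistake
    return results
```

Note that a large spike inflates the mean and deviation of the following windows, so a simple approach like this can miss anomalies that occur shortly after another one.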

Even looking at the number of visits by time of day can make it easier to narrow down when an incident took place, if we observe any peculiarity:

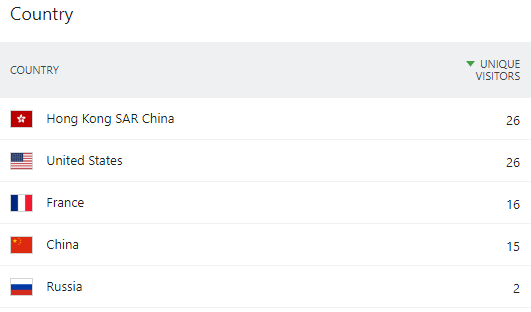

The map of the origin of visits can help us correlate possible events with countries that show recent malicious activity. For example, it can help us detect the presence of a botnet:

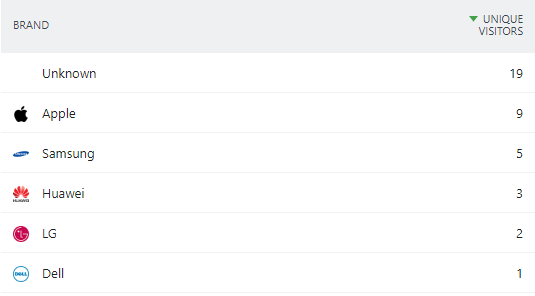

Isolating the technology used (device, operating system, browser, etc.) can also be useful in resolving an incident:

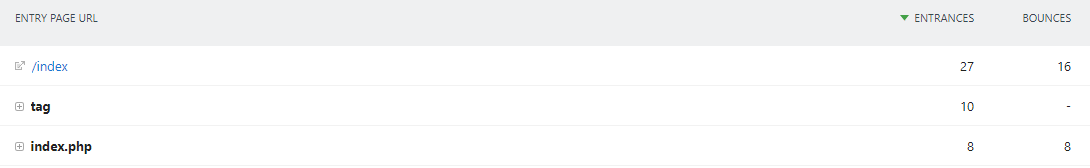

Knowing which pages are the most requested can help us identify, for example, the abuse of an API that is being exploited or the presence of an anomalous page that should not exist:

In addition, fingerprinting both logged-in users and anonymous visitors provides valuable information for correlating visits that at first glance seem different (for example, because the user has changed IP and country of origin with a VPN) but really come from the same person. For this purpose, persistent cookies or a simple correlation of device, operating system, browser and screen resolution can be used.

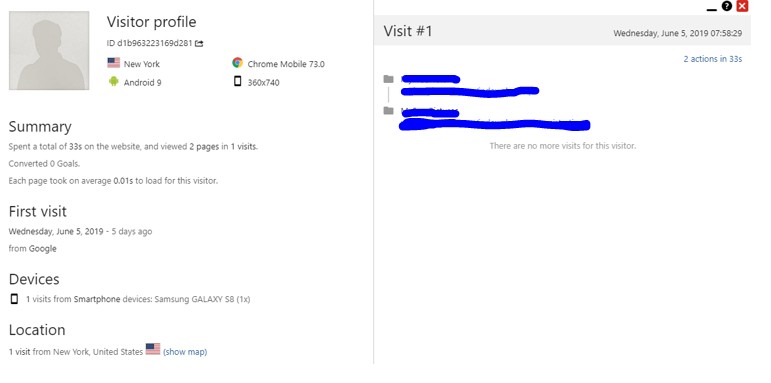

As an example, the following visit can be narrowed down to a Samsung Galaxy S8 device running Android 9 with Chrome 73.0, with a 360 × 740 screen resolution, from New York at 7:58 AM. When managing an incident, this combined information can be very valuable.
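The simple correlation of device, operating system, browser and screen resolution mentioned above can be sketched as a grouping of visit records. This Python example is an illustration, not how Matomo does it internally; the field names of the visit dictionaries and the function name are hypothetical.

```python
from collections import defaultdict


def group_by_fingerprint(visits):
    """Group visits by (device, os, browser, resolution); keep groups seen from >1 IP."""
    groups = defaultdict(list)
    for visit in visits:
        key = (visit["device"], visit["os"], visit["browser"], visit["resolution"])
        groups[key].append(visit)
    # Only fingerprints that appear from several IPs hint at one visitor changing address.
    return {
        key: items
        for key, items in groups.items()
        if len({item["ip"] for item in items}) > 1
    }
```

A fingerprint this coarse is shared by many people with the same phone model, so a match is only a hint that should be confirmed with other evidence, such as a persistent cookie.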

For all these reasons, I think it is worth stressing the value of web analytics tools such as Matomo, especially because we do not hand our information to third parties, since we host it on our own infrastructure.

Stay alert to cybersecurity news

This point is closely related to the monitoring we discussed in the section on keeping the application updated. Being up to date with the latest cybersecurity news allows us to anticipate attacks by learning about vulnerabilities as soon as they are published, giving us a good margin for maneuver and update planning, generally before they are actively exploited.

It is therefore convenient to subscribe to security bulletins from those manufacturers whose technology we have installed, both at the operating system level (Red Hat, CentOS, Microsoft, etc.) and at the application level (Prestashop, WordPress, etc.). It is also a good idea to subscribe to and frequently visit general newsletters on computer security, such as those provided by the CCN-CERT, CSIRT-CV, Hispasec one a day, Generalitat Valenciana Awareness Portal or Un informático en el lado del mal (A computer scientist on the evil side), or this same blog SecurityArtWork, among many others.

It is also advisable to read the reports on malware trends frequently to find out how and where the malware market is moving and to be able to anticipate potential threats, such as this one by MalwareBytes, this one by McAfee or this one by ESET.