Living beings are experts at managing risks. We have been doing it for millions of years. It’s called, among other things, survival instinct. We wouldn’t be here if we were bad at it.

We avoid them, we mitigate them, we externalize them, we take them on.

For example, is it going to rain today? If it rains, how much is it going to rain? Do I take my umbrella? Do I stay home? Will I run into a traffic jam on the way to work? Will I be late for the meeting? Do I call to let them know? Do I try to postpone the meeting? Will I puncture a tire on the way home? When was the last time I checked the spare tire? Have I paid the insurance premium? What is the roadside assistance coverage?

All these everyday processes of risk identification and risk assessment are carried out unconsciously all the time, and we apply risk management measures without even realizing it. We grab an umbrella, call the office to inform them of a delay, attend the meeting by phone, leave home earlier or decide to take public transport. Obviously, it’s not always that easy.

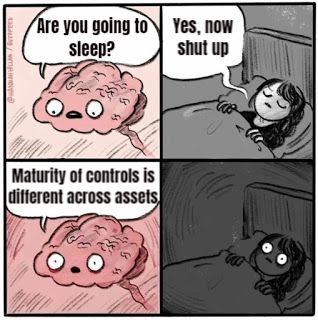

However, when we move to the corporate environment, we start talking about risk tolerance; probability, impact and vulnerability criteria; (standard) threat catalogs; strategies; risk registers; inherent, residual and projected risk; mitigation ratios. And we get lost for months in concepts, documents and methodologies, moving further and further away from the reality we have to analyze and protect.

The orthodoxy of (cybersecurity) risk management

In this context, a few months ago, in the middle of the pandemic, I came across an interesting article that contrasted two very different visions of risk management, which it called RM1 and RM2.

Basically, and quoting directly from the article, RM1 focuses on “risk management for external stakeholders (Board, auditors, regulators, government, credit rating agencies, insurance companies and banks)”, while RM2 is “risk management for decision makers within the company”.

A few weeks or months later, Román Ramírez published a post in a similar vein, criticizing the prevailing orthodoxy in cybersecurity risk management and the problems it creates.

Both articles echo a perception I have had for some time now: in many cases, risk analysis and management (of cybersecurity, in this case), as we know it and practice it today, provides little value. Although risk analysis and management is an essential exercise, the current approach needs a major change if its practice is to have a significant impact on business decisions.

Throughout this series of posts I will analyze some of the problems I encounter, or have encountered in the past, in this area, although I am afraid there will not always be easy solutions to apply. Nor does this series attempt to establish any model, paradigm or alternative methodology; rather, it points to issues that are open to improvement and that, in some cases, persist a) because they have always been done this way or b) because the theoretical literature says they should be done this way, even though applying them to real situations is complex and provides little value.

So, let’s get on with it.

Inherent risk

The concept of inherent risk is one of the first problems that anyone following a classical approach encounters when starting a risk analysis. According to this model, we have the following scheme:

Inherent or potential risk -> [evaluation of existing controls] -> residual risk -> [implementation of controls or improvement of existing ones] -> future or projected risk.
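To make the scheme concrete, here is a minimal Python sketch of its three stages. It assumes qualitative scales from 1 to 5 and risk computed as probability × impact, a common convention that the scheme itself does not mandate; the `apply_controls` helper and the number of scale steps each set of controls removes are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

# Assumption: qualitative 1-5 scales and risk = probability * impact.
SCALE_MIN, SCALE_MAX = 1, 5


@dataclass(frozen=True)
class Assessment:
    probability: int  # 1 (rare) .. 5 (almost certain)
    impact: int       # 1 (negligible) .. 5 (catastrophic)

    @property
    def risk(self) -> int:
        return self.probability * self.impact


def apply_controls(a: Assessment, prob_steps: int, impact_steps: int) -> Assessment:
    """Hypothetical helper: lower probability/impact by whole scale steps,
    never going below the bottom of the scale."""
    return Assessment(
        max(SCALE_MIN, a.probability - prob_steps),
        max(SCALE_MIN, a.impact - impact_steps),
    )


# Inherent or potential risk: no controls at all, so both values sit at the top.
inherent = Assessment(probability=SCALE_MAX, impact=SCALE_MAX)
# [evaluation of existing controls] -> residual risk (invented reductions)
residual = apply_controls(inherent, prob_steps=2, impact_steps=1)
# [implementation or improvement of controls] -> projected risk (invented reductions)
projected = apply_controls(residual, prob_steps=1, impact_steps=1)

for name, a in [("inherent", inherent), ("residual", residual), ("projected", projected)]:
    print(f"{name:>9}: P={a.probability} I={a.impact} R={a.risk}")
```

With these invented reductions, risk falls from 25 to 12 and then to 6. Note that the whole chain hangs on the first value, which is precisely the one the rest of this post questions.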

Although ISO 31000 (suspiciously) does not define the concept of inherent risk (and it seems that ISO/IEC 27000:2018 does not either), inherent risk is traditionally understood as the risk to which an asset is exposed in the absence of controls, i.e. prior to the risk management process.

The purpose of inherent risk is to provide the risk analysis with an initial starting point that only considers events exogenous to the asset. Once this objective baseline is properly established, we should be able to measure the effect that a given security control has on risk reduction, which makes the model methodologically sound. On paper this makes perfect sense, but applying it to reality is not so simple and raises countless questions.

The problem behind the concept of control

The first of these, which borders on the metaphysical, lies in the concept of control. If we start from the ISO/IEC 27000:2018 definition of control, “a measure that modifies risk”, we can see that some assets have, by their very nature, controls that we could consider inherent.

For example, a data center will have an access door, a building will have cabling run through the ceiling or raised floor, and an operating system will have a logical access control system, however rudimentary. Yet the inherent risk approach forces us not only to ignore all these controls (because they are risk-modifying measures), but also to treat assets as homogeneous, moving away from reality.

In the inherent risk world, strictly speaking, a server with redundant power supplies and RAID 5, housed in a climate-controlled data center with fire suppression, is no more secure than the 2015 laptop that the marketing team carries back and forth for their presentations (I don’t want to get ahead of future posts, but identifying and evaluating controls at the asset level is another assumption we love to apply without thinking too much about its implications).

What is an asset without security controls?

If we manage to set aside the idea of controls inherent to the asset and mentally place ourselves in that imaginary nightmare world where no asset has any security controls in place and all assets of the same nature are equally insecure, we will realize how difficult it is to conceive of an asset without security controls.

Consider an e-commerce web server. This mental exercise would mean eliminating patching, backups, firewall and all perimeter network controls, operating system access control, physical security, cabling security, operating procedures and processes, vulnerability management and malware protection, password policies, encryption of information in transit, and so on until all controls are eliminated.

The result is frightening, even if it is hard to imagine.

But we still have some decisions to make, and they directly affect our inherent probability and impact levels. Do we place the server on the street or in the lobby of the building? How many programming bugs do we assume are present in the design and code (do we assume a bad developer or a good one?)? Do we assume that it complies with absolutely no legal regulations? That it is trivially vulnerable to the entire OWASP Top 10, or just some of it? That upgrades are done at any time without any planning at all?

Inherent probability and impact

At this point, if we can actually imagine such an asset, we will realize that we are already quite far from any known reality, and it is from there that we must assess the probability and impact of a hypothetical threat materializing on an asset unlike anything we have in our organization.

And in doing so, we will realize that both are, in the vast majority of cases, very high. In fact, they are often at values that have no place on the scale we will use later in the residual risk calculation. For example, in the absence of controls, more than one threat will materialize daily, or even more often, and such a frequency is unlikely to appear on our residual probability scale.
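The scale mismatch can be illustrated with a small sketch. The frequency bands below are a hypothetical residual probability scale (no standard defines these exact values); the point is only that a threat materializing daily, as many would with no controls at all, does not fit anywhere on it.

```python
# Hypothetical residual probability scale: level -> (min, max) events per year.
# The bands are illustrative assumptions, not taken from any standard.
RESIDUAL_PROBABILITY_SCALE = {
    1: (0.0, 0.1),    # less than once every ten years
    2: (0.1, 1.0),    # up to once a year
    3: (1.0, 4.0),    # up to quarterly
    4: (4.0, 12.0),   # up to monthly
    5: (12.0, 52.0),  # up to weekly
}


def probability_level(events_per_year: float) -> int:
    """Map an annual frequency to a scale level, failing loudly if it does not fit.

    Bands share their endpoints; iterating in ascending order assigns a
    boundary value to the lower level.
    """
    for level, (low, high) in sorted(RESIDUAL_PROBABILITY_SCALE.items()):
        if low <= events_per_year <= high:
            return level
    raise ValueError(f"{events_per_year} events/year is outside the scale")


print(probability_level(0.5))  # residual: roughly once every two years
try:
    probability_level(365)     # inherent: daily, with no controls in place
except ValueError as exc:
    print(exc)
```

The options at that point are all unsatisfying: stretch the scale just for the inherent calculation, or clamp every such threat to the top level, which erases any difference between them.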

In any case, suppose we have managed to overlook all of the above and, roughly speaking, to assess probability and impact qualitatively. We still cannot ignore that we have done so under assumed circumstances and conditions that make this assessment unreliable, to say the least. Not only because of all the implications of evaluating an asset without controls, but also because we lack data or metrics to give us “ground” on which to base ourselves.

And yet, that is our main entry point to the next step, the residual risk calculation.

Distance from risk managers or owners

One of the last problems with inherent risk analysis (at least in this post), which ties in with the “ground” discussed in the previous section, is the difficulty of holding a productive conversation in the terms that inherent risk imposes.

Any IT or business person will be able to point out, without too much trouble, a handful of risks that concern them (whether or not there is a risk register) and will even be able to give a rough assessment of their likelihood and impact, in terms of natural language, economic impact, historical or expected frequency, and so on. These assessments will be more or less objective, but they are real risks.

However, if asked about risks in the absence of controls, more than one will raise an eyebrow and look at us suspiciously, as if to say “how am I supposed to know that, and why does it matter?”

The point is that calculating inherent risk departs from how organizations normally operate, and assumes that we can properly assess the impact and likelihood of a hypothetical situation occurring on hypothetical assets that, in reality, no manager is concerned about, all to provide a false sense of objective formality in a process with a strong subjective component.

The solution

The most immediate solution to this problem is to omit the calculation of inherent risk and work directly with the level of residual risk, bearing in mind that inherent and residual risk are simply two moments in time of the same analysis process. In a way, it is as if, when assessing inherent risk, we were at the beginning of time for the asset in question, and little by little we move forward seeing how controls are deployed, until we reach the present and our residual risk (which is, I insist, the one we are interested in). A bit like those videos that start millions of years ago and in fast motion bring us to the present day.

It is important to note that the inherent risk does not determine the catalog of threats to which the organization is exposed, but only the extent to which each of those risks should concern us and therefore how many controls we should implement in response. The catalog of threats must be obtained in a previous phase from the analysis of the internal and external context of the organization.

However, we cannot ignore the fact that calculating inherent risk, despite all the problems mentioned, can in theory allow us to identify the key controls in place, in other words, the controls that are most effective at reducing risk. Although that option exists, in a qualitative assessment process with the degree of uncertainty outlined above, it is hard to say to what extent such an exercise is feasible or useful.

In this sense, the FAIR methodology turns the concept on its head and defines inherent risk as “the amount of risk that exists when key controls fail”. Thus, instead of deriving residual risk from inherent risk, it establishes residual risk directly by assessing the existing set of controls (which, in fact, is where the false problem that gives rise to inherent risk lies), and makes it possible to build specific scenarios of “partial” inherent risk in which one or more controls fail. Without knowing the methodology in depth or having applied it in practice, it is difficult for me to say to what extent this approach is valid, but it sounds promising.
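A rough sketch of how such “partial” inherent risk scenarios could be computed follows. It borrows only FAIR’s top-level decomposition (loss event frequency as threat event frequency times vulnerability, and annualized loss as that frequency times loss magnitude). Everything else is an assumption for illustration: the controls, their effectiveness figures and the monetary values are invented, and treating vulnerability as the product of independent control failure rates is a simplification, not how FAIR itself derives vulnerability.

```python
from itertools import combinations
from math import prod

# Simplified illustration inspired by FAIR's top-level decomposition.
# Assumptions (hypothetical, not part of FAIR): controls act independently
# and each one stops a fixed fraction of threat events.
THREAT_EVENT_FREQUENCY = 50.0  # attempts per year (hypothetical)
LOSS_MAGNITUDE = 20_000.0      # cost per loss event (hypothetical)
CONTROLS = {                   # control -> fraction of threat events it stops
    "patching": 0.6,
    "waf": 0.5,
    "mfa": 0.7,
}


def annualized_loss(failed=()) -> float:
    """Expected annual loss when the controls named in `failed` do not work."""
    vulnerability = prod(
        1.0 - eff for name, eff in CONTROLS.items() if name not in failed
    )
    loss_event_frequency = THREAT_EVENT_FREQUENCY * vulnerability
    return loss_event_frequency * LOSS_MAGNITUDE


# Residual risk is assessed directly from the controls currently in place.
residual = annualized_loss()
print(f"residual: {residual:,.0f}")

# "Partial" inherent risk: scenarios where one or two key controls fail.
for k in (1, 2):
    for failed in combinations(CONTROLS, k):
        print(f"{' + '.join(failed)} fail: {annualized_loss(failed):,.0f}")

# The control whose failure costs the most is the strongest "key control" candidate.
key_control = max(CONTROLS, key=lambda c: annualized_loss((c,)))
print(f"key control: {key_control}")
```

With these made-up numbers, residual exposure is 60,000 a year, and the single-failure scenarios range from 120,000 (WAF) to 200,000 (MFA), which flags MFA as the key control. Failing every control at once reproduces the classic “no controls” figure, but here it is just one more scenario rather than the mandatory starting point.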

But I want my inherent risk

Having said all this, it may be the case that we need to calculate the inherent risk, due to audit requirements, internal methodology of the organization, demand from the risk department, consistency with previous analyses, etc.

In such a case, we have two main options.

If we do not need to link inherent and residual risk explicitly, we can carry out an initial inherent risk assessment and a separate one that derives the residual risk, and describe at a high level how we get from one to the other. It’s not the best option, but what we must not lose sight of is that residual risk is what we want to keep under control.

If we need to link the two explicitly, we can do so by means of mitigation coefficients associated with the maturity levels of the controls implemented, preferably mapped onto a standard. That is, we calculate the residual risk by applying to each risk percentage reductions in probability, impact or both, derived from the maturity level we have established for each control. As we will see in another post, this introduces an additional source of variability, but it is one of the feasible alternatives.

In this case, to reduce the effect of the uncertainty introduced by inherent risk, and given that inherent impact and probability will be high for almost all threats, we shift the burden of obtaining the residual risk onto the coefficients derived from control maturity.
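A minimal sketch of this coefficient-based approach, under stated assumptions: maturity is rated from 0 to 5 in a CMMI-like fashion, the reduction percentage for each level in `MATURITY_REDUCTION` is invented for illustration (in practice it would come from the organization’s methodology or the chosen standard), and each control is tagged as reducing probability (`"p"`), impact (`"i"`) or both. Inherent values are pinned at the top of a 1 to 5 scale, as the text suggests.

```python
# Hypothetical mapping from control maturity (0-5, CMMI-like) to the fraction
# by which the control reduces probability and/or impact. Values are assumptions.
MATURITY_REDUCTION = {0: 0.0, 1: 0.1, 2: 0.2, 3: 0.35, 4: 0.5, 5: 0.6}

# Inherent values fixed at the top of a 1-5 scale for every threat.
INHERENT_PROBABILITY = 5.0
INHERENT_IMPACT = 5.0


def residual_risk(controls):
    """Return (probability, impact, risk) after applying the controls.

    `controls` is a list of (maturity, target) pairs, where target is
    "p" (probability), "i" (impact) or "both".
    """
    probability, impact = INHERENT_PROBABILITY, INHERENT_IMPACT
    for maturity, target in controls:
        if target not in ("p", "i", "both"):
            raise ValueError(f"unknown target: {target!r}")
        factor = 1.0 - MATURITY_REDUCTION[maturity]
        # Reductions combine multiplicatively, so they can never exceed 100%.
        if target in ("p", "both"):
            probability *= factor
        if target in ("i", "both"):
            impact *= factor
    # Never drop below the bottom of the scale.
    probability, impact = max(1.0, probability), max(1.0, impact)
    return probability, impact, probability * impact


# Two probability-reducing controls (maturity 4 and 3), one impact-reducing (2).
p, i, r = residual_risk([(4, "p"), (3, "p"), (2, "i")])
print(f"residual: P={p:.2f} I={i:.2f} R={r:.2f}")
```

Because every threat starts from the same maximum, the residual figure depends only on the maturity ratings and the coefficient table, which is exactly where the additional variability mentioned above comes from. Combining reductions multiplicatively rather than adding percentages is itself a design assumption.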

So much for the first post in the series, in which we have analyzed the problems behind the concept of inherent risk. In future posts we will look at other aspects that distance risk analysis and management from the reality we want to analyze and protect.