ChatGPT is by now a well-known digital tool. This artificial intelligence (AI) is having a huge impact on the information and communication age. It is being used for many purposes to improve existing systems. Some of those uses, however, are generating controversy, which is one more reason why it is attracting so much attention.

If you are not yet familiar with ChatGPT, it is a tool developed by OpenAI that specialises in dialogue: a chatbot. In other words, you enter a text input and ChatGPT generates a coherent text that responds to what you have written.

ChatGPT can also be used in health. But what do we mean by “in health”? We mean that it can be applied in any area that affects people’s wellbeing, from developing new software that improves the health management of a hospital to asking questions about our own wellbeing from home.

Several projects have been developed using AI with a focus on health. Some of them use the same models as ChatGPT and others are based on proprietary technology, but all of them take communication with the patient into account.

AI triage of patients

Triage is a process that sorts and classifies patients according to how urgent their condition is, which is essential when demand and clinical needs exceed resources. There are several projects where AI is used for efficient triage in health centres and hospitals.
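To make the idea of sorting by urgency concrete, here is a minimal sketch of a triage queue. It is not the algorithm used by any of the projects mentioned in this article: the five-level scale, the `Patient` class and the `triage_order` function are our own assumptions, chosen only to illustrate the principle.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Assumption: a five-level urgency scale where 1 is most urgent and 5 is least.
_arrival = count()  # running counter used to break ties by arrival order


@dataclass(order=True)
class Patient:
    urgency: int
    arrival: int = field(default_factory=lambda: next(_arrival))
    name: str = field(default="", compare=False)  # not used for ordering


def triage_order(patients):
    """Return patient names ordered by urgency, then by arrival."""
    heap = list(patients)
    heapq.heapify(heap)
    return [heapq.heappop(heap).name for _ in range(len(heap))]


waiting = [
    Patient(3, name="sprained ankle"),
    Patient(1, name="chest pain"),
    Patient(3, name="mild fever"),
    Patient(2, name="deep cut"),
]
print(triage_order(waiting))
```

A real system would also weigh the factors described for hospital-level tools, such as staffing and waiting times, which this sketch ignores.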

One such example is the React Project at the Hospital Universitario Virgen del Rocío, where an advanced optimisation algorithm, working with the hospital information systems, takes into account factors such as attendance frequency, variability, distribution of hours and severity levels. In this way, resources, work shifts and waiting times are organised.

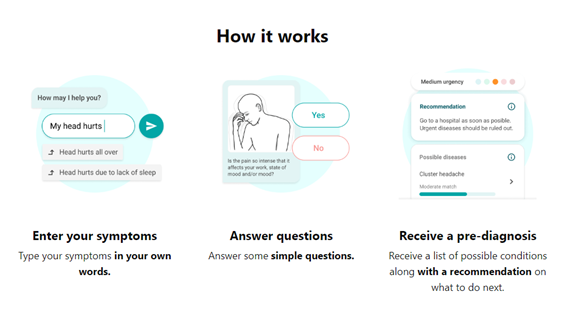

Another example is the company Mediktor, founded in Barcelona in 2011. The company has developed a triage and pre-diagnosis tool using artificial intelligence that is being adopted by more and more healthcare organisations, such as insurance companies and hospitals.

Predicting dementia and Alzheimer’s with GPT-3

Another example of AI implementation in this field is a study conducted by researchers at Drexel University, who showed that OpenAI’s GPT-3 can identify cues in spontaneous speech that predict the early stages of dementia and Alzheimer’s disease with 80% accuracy. The study suggests that there is great potential to develop AI-driven tools for early diagnosis of dementia and to provide personalised interventions tailored to individual needs.

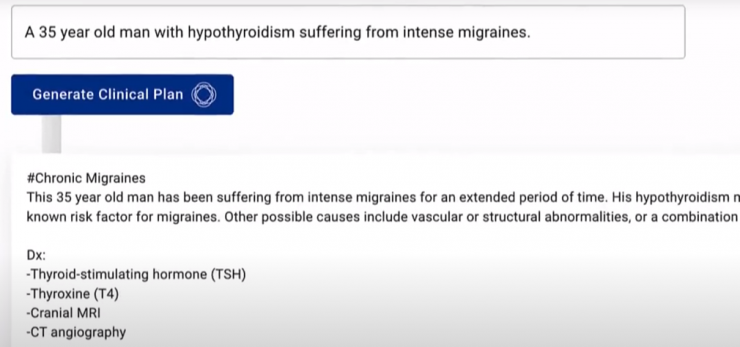

Clinical plan generation

The last project we present is Glass AI, a tool under development for the Glass platform that uses AI to produce a diagnosis or clinical plan from a text description of a patient’s clinical problem. This tool is not intended for the general public but for doctors and healthcare staff trained to interpret the output, as it may be incomplete, incorrect or biased. It would therefore be a very useful support tool for qualified clinical staff.

ChatGPT in our lives

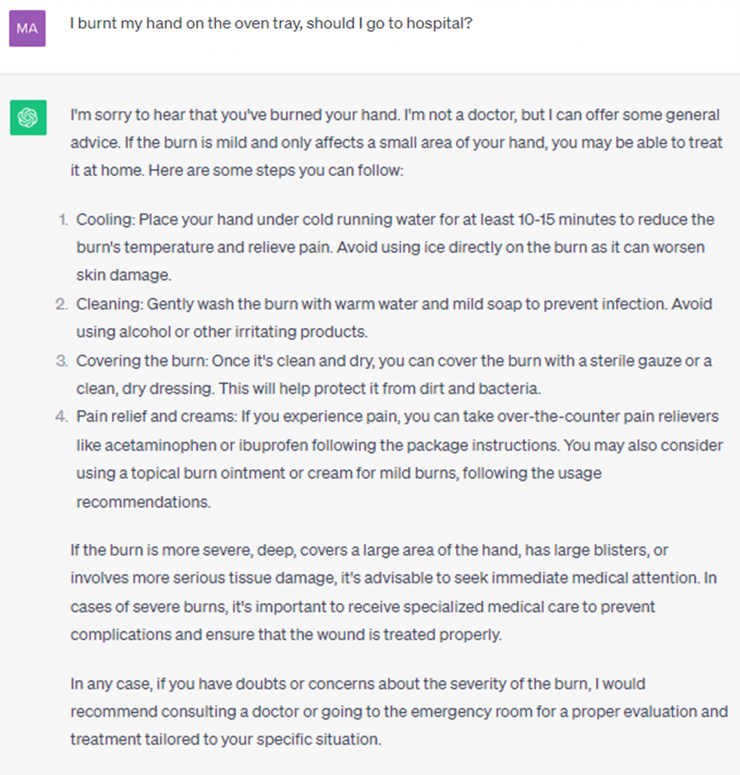

The reality is that ChatGPT is already part of our lives as an almost indispensable tool. So how can it help us today to make health decisions in our day-to-day lives? Because ChatGPT uses deep learning to hold a conversation and tailor its answers to what we write, we can ask it questions about our wellbeing or even about symptoms we are suffering from.

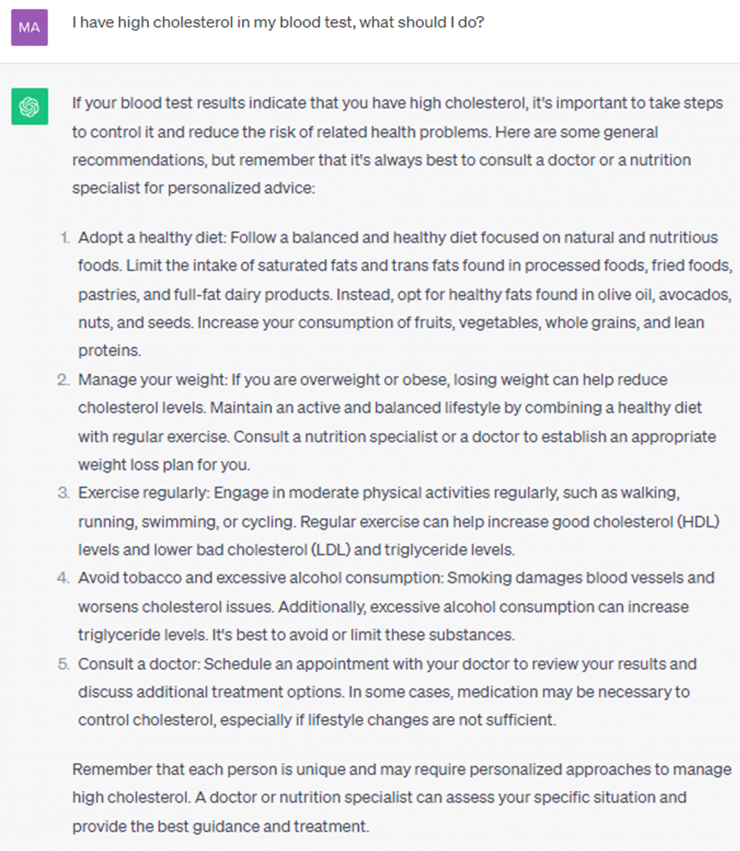

This technology has great potential to improve the efficiency and quality of healthcare. We have prepared some examples to show how ChatGPT can inform us about what to do in certain situations. Its advantages are the speed of response and the fact that it is not necessary to travel to a health centre to get an answer. This can be a great help for the health system, easing the pressure on its services so that it can offer good clinical care.

In the first case, we tell ChatGPT that we have burnt our hand on the oven tray and ask whether we need to go to hospital. One of the first things ChatGPT tells us is to assess the burn, i.e. whether it is minor or severe. If it is severe, it advises us to seek medical attention as soon as possible. If it is minor, it gives us several tips on how to reduce the pain and help the burn heal correctly. In addition, ChatGPT always emphasises that a health worker is the person who can give the best advice.

In the second case, we tell ChatGPT that the results of a blood test have shown high cholesterol and we ask how we can reduce it. ChatGPT offers general measures to control cholesterol and information on the right diet and exercise to maintain good health, again emphasising the role of the doctor.

These examples demonstrate how ChatGPT can be used to provide personalised, accurate and detailed information to patients on health and disease issues, which can improve patient understanding and ultimately improve the quality and efficiency of healthcare, avoiding the overcrowding of healthcare facilities.

ChatGPT in healthcare cybersecurity

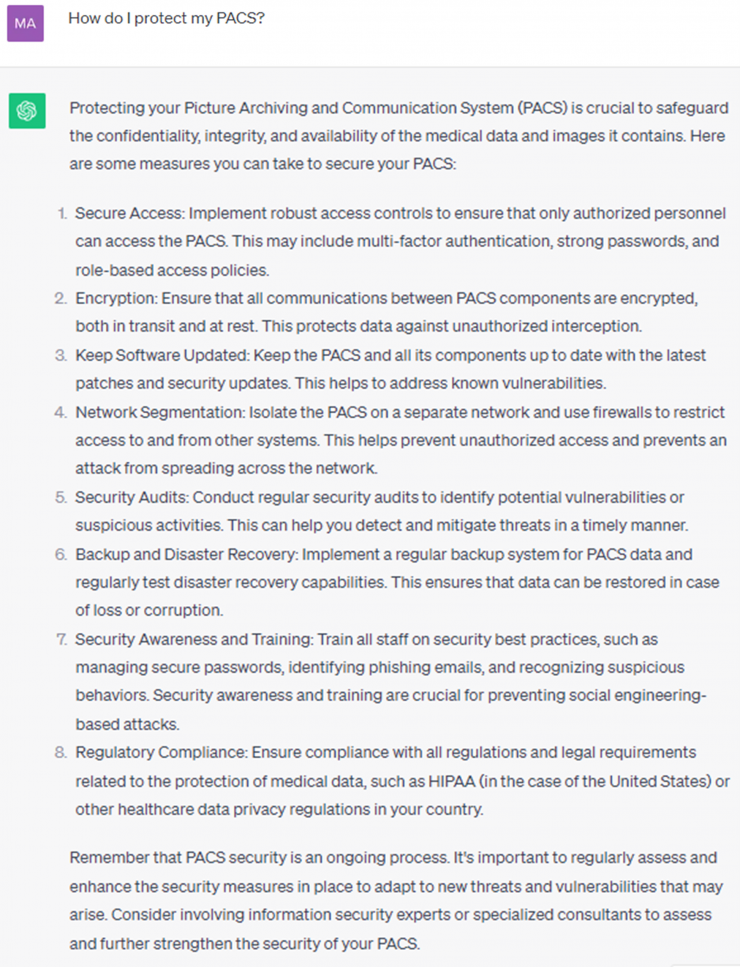

Another of the multiple applications that ChatGPT can have is in the field of cybersecurity. In this article, we are going to focus on cybersecurity applied to the healthcare sector.

Let’s say, for example, that an organisation with a PACS (Picture Archiving and Communication System) does not have any security measures in place, but wants to start protecting its information. As the organisation does not yet have any knowledge of cybersecurity in this area, it could start by asking ChatGPT what it should do to protect a PACS. The answer generated by the tool provides general recommendations on how to secure it.

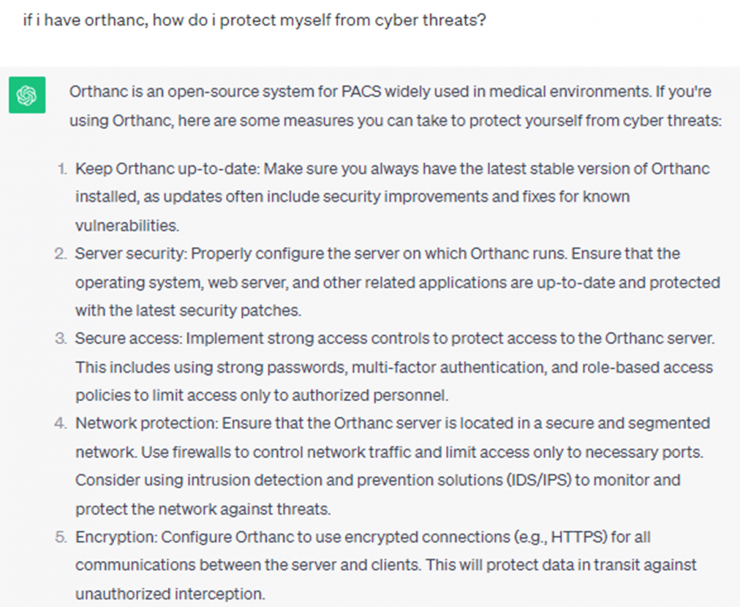

In our case we use Orthanc, an open source tool. As we want more specific measures, we ask ChatGPT to tailor its answer to our software and explain how to protect it.

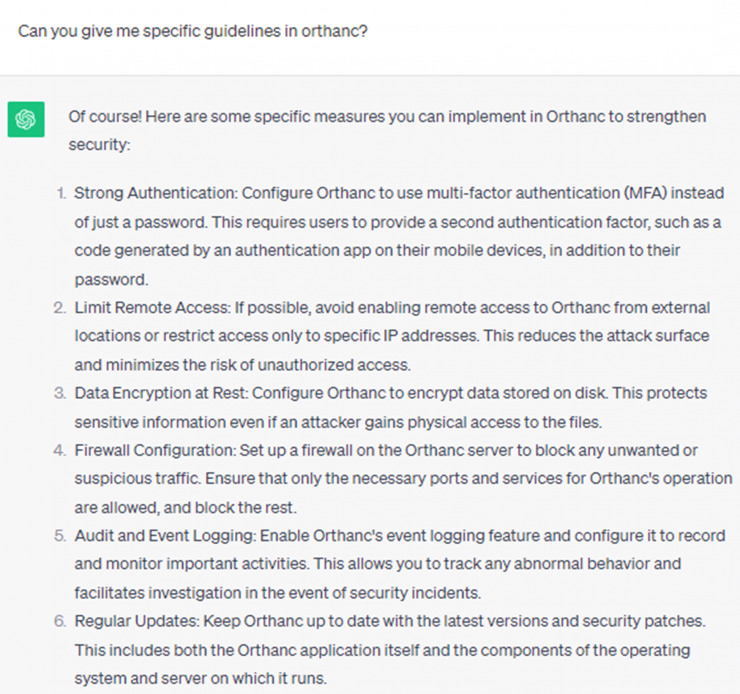

ChatGPT again answers in general terms, but we are looking for more concrete guidelines, so we ask again.

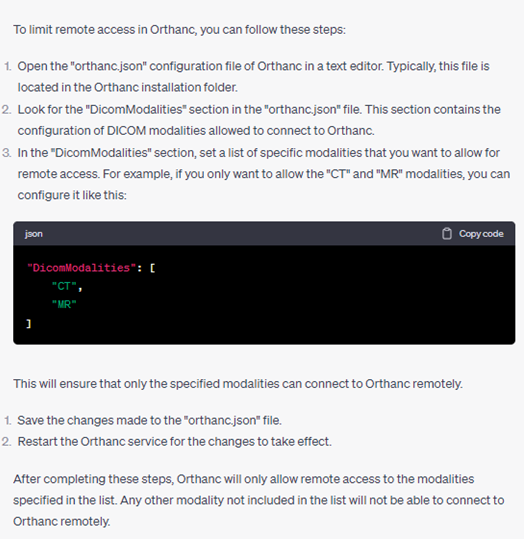

One of the measures that catches our attention is to limit remote access. We go deeper by asking ChatGPT for the procedure, and it explains how to limit the access of the different modalities (medical imaging machines).

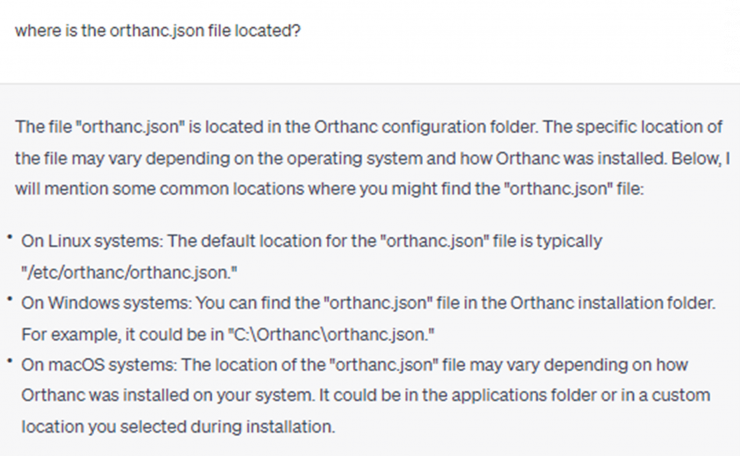

We check that these are indeed the right steps by putting them into practice in our Orthanc. Following the instructions provided by ChatGPT, the first step is to open the configuration file “orthanc.json” and go to the “DicomModalities” section. Here we hit the first obstacle: we don’t know where this file is located, so we ask ChatGPT.

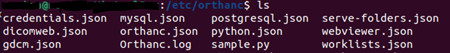

In our case we use Linux, so we check whether the file is indeed at the path “/etc/orthanc/orthanc.json”. And, yes, it is!
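The same check can be scripted. The sketch below looks for the file at the path suggested above and, as a purely hypothetical fallback of our own, in the user’s home directory. The function name `find_config` is ours and is not part of Orthanc.

```python
from pathlib import Path

# Only the first location comes from the walkthrough above; the second is a
# hypothetical fallback added for illustration.
CANDIDATES = [
    Path("/etc/orthanc/orthanc.json"),
    Path.home() / "orthanc.json",
]


def find_config(candidates=CANDIDATES):
    """Return the first candidate path that exists as a file, or None."""
    for path in candidates:
        if path.is_file():
            return path
    return None


print(find_config())
```

If the function returns `None`, the file lives somewhere else on that installation and the path given by ChatGPT does not apply.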

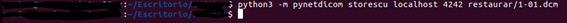

Before modifying the file, we check that an ultrasound (US) medical image we have is sent correctly. We know the transfer has worked if no error is displayed.

Now we are going to edit the file and restart the Orthanc service, as previously indicated by ChatGPT. We add the fragment mentioned in point 3, allowing only the sending of CT and MRI images, so the image we sent previously should not be accepted.

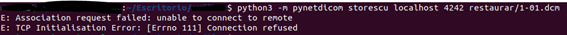
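Since the fragment itself only appears in the screenshot, here is a minimal sketch of what such a change could look like. It is not the text ChatGPT produced. It relies on our understanding of Orthanc’s configuration: each entry under “DicomModalities” names a remote DICOM node as `[AET, host, port]`, and the “DicomAlwaysAllowStore” and “DicomCheckModalityHost” options control whether unlisted senders are accepted. The AETs, addresses and the helper `restrict_store_to` are hypothetical.

```python
import json


def restrict_store_to(config, allowed):
    """Return a copy of `config` that lists only the `allowed` remote nodes.

    `allowed` maps a symbolic name to a tuple (AET, host, port).
    """
    updated = dict(config)
    updated["DicomModalities"] = {
        name: [aet, host, port] for name, (aet, host, port) in allowed.items()
    }
    # Refuse incoming C-STORE requests from senders that are not listed above.
    updated["DicomAlwaysAllowStore"] = False
    # Compare the sender's address as well, not only its AET.
    updated["DicomCheckModalityHost"] = True
    return updated


# Hypothetical AETs and addresses, for illustration only.
allowed_nodes = {
    "ct": ("CT_SCANNER", "192.0.2.10", 104),
    "mri": ("MRI_SCANNER", "192.0.2.11", 104),
}
fragment = restrict_store_to({"DicomAet": "ORTHANC", "DicomPort": 4242}, allowed_nodes)
print(json.dumps(fragment, indent=2))
```

The sketch works on a plain dictionary on purpose. A real “orthanc.json” may contain comments that Python’s `json` module would reject, so the printed values are meant to be copied into the file by hand before restarting the service.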

Next, we launch the same command as before with the ultrasound scan and we see that this connection is rejected. This limits the remote connection to only the modalities that we want, in this case CT and MRI scans.

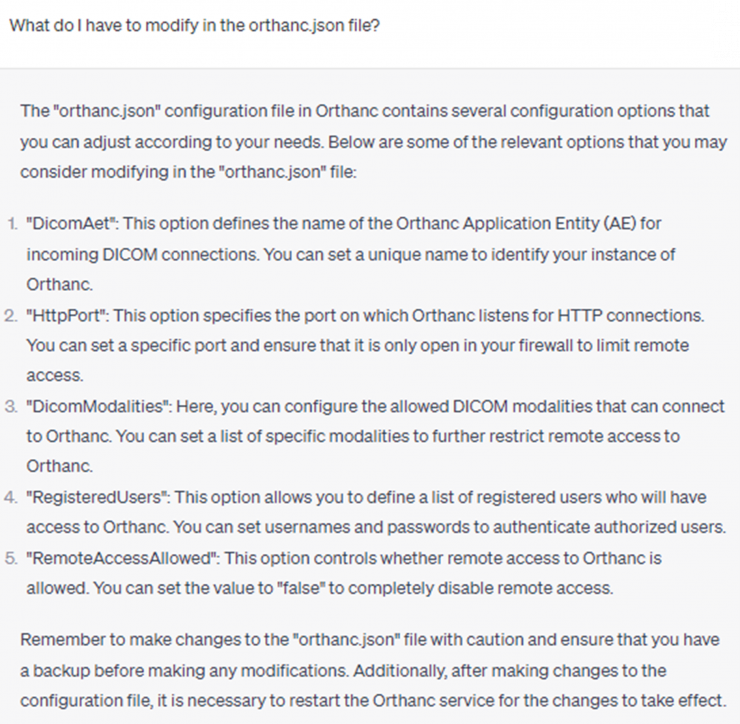

You can also ask ChatGPT which other actions can be performed to limit access via the “orthanc.json” file. It will list several of the entries that can be modified and what each of them is for.
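As a complement to that list, the sketch below reports how a handful of access-related entries are set in a configuration. The option names exist in Orthanc’s configuration file to the best of our knowledge, but the selection and the one-line descriptions are our own paraphrase, not ChatGPT’s answer, and `access_report` is a hypothetical helper.

```python
import json

# Our own selection of access-related options, with paraphrased meanings.
ACCESS_OPTIONS = {
    "RemoteAccessAllowed": "accept HTTP requests from hosts other than localhost",
    "AuthenticationEnabled": "require HTTP basic authentication",
    "RegisteredUsers": "user/password pairs accepted by the HTTP server",
    "DicomAlwaysAllowStore": "accept C-STORE from senders that are not listed",
    "DicomCheckModalityHost": "check the sender's address as well as its AET",
    "DicomCheckCalledAet": "check that the called AET is Orthanc's own",
}


def access_report(config):
    """Return one 'option = value  # meaning' line per access-related option."""
    lines = []
    for key, meaning in ACCESS_OPTIONS.items():
        if key not in config:
            shown = "<not set>"
        elif key == "RegisteredUsers":
            shown = f"{len(config[key])} user(s)"  # never echo passwords
        else:
            shown = json.dumps(config[key])
        lines.append(f"{key} = {shown}  # {meaning}")
    return lines


sample = {"RemoteAccessAllowed": True, "RegisteredUsers": {"admin": "change-me"}}
print("\n".join(access_report(sample)))
```

An option reported as `<not set>` falls back to Orthanc’s default, which should be looked up in the documentation for the installed version.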

Bear in mind that ChatGPT’s instructions are not always correct: they may contain errors or describe old versions and procedures that have since changed. Even so, it is a very fast source of help. It is also important to be aware of the data that is sent to ChatGPT and to ensure that it does not include sensitive or confidential information.
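One practical precaution is to mask obvious secrets before pasting a configuration excerpt into a chat. The following sketch is an assumption of ours and far from a complete anonymiser: it only replaces IPv4 addresses and the values of keys whose names contain “Password”, “Token” or “Secret”. Patient data requires proper de-identification and should not be pasted at all.

```python
import re

# Matches dotted IPv4 addresses such as 192.168.1.20.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

# Hypothetical choice of key names whose string values are treated as secrets.
SECRET_KEYS = re.compile(
    r'("(?:\w*Password\w*|\w*Token\w*|\w*Secret\w*)"\s*:\s*)"[^"]*"',
    re.IGNORECASE,
)


def redact(text):
    """Mask secret values and IPv4 addresses in a configuration excerpt."""
    text = SECRET_KEYS.sub(r'\1"<redacted>"', text)
    return IPV4.sub("<ip>", text)


excerpt = '"host": "192.168.1.20", "Password": "hunter2"'
print(redact(excerpt))
```

Anything the patterns do not recognise passes through unchanged, so the result still needs a manual read before it is shared.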

CHATGPT CONTROVERSY

We have seen the applications of ChatGPT in health and the advantages it offers, but it is natural to wonder whether these technologies will replace people in the future. A recent news item claims that ChatGPT’s answers to medical questions are more accurate than those of doctors. To what extent is this true, and will it remain true? We should not forget that ChatGPT is a trained model: it has learned to recognise which answers are likely to be correct, and it can store more knowledge than a person. Moreover, the information ChatGPT has collected currently runs only up to September 2021, so it may become obsolete over time if it is not updated. Most importantly, however, ChatGPT is not human. The model lacks ethical guidance and clinical judgement. It also lacks empathy, and people often prefer human contact when dealing with their problems.

For these reasons, ChatGPT is a very useful tool, but it should be used as a support and not as a replacement. In other words, it is a tool that helps healthcare staff to manage repetitive tasks or to make a first approximation when a professional review is not yet available.

CONCLUSION

In conclusion, ChatGPT is a promising technology that has the potential to significantly improve the efficiency and quality of healthcare, especially in health promotion and disease prevention, as well as health education. As the technology continues to evolve, we are likely to see further integration of ChatGPT in this field in the future, but always as a support tool and not as a replacement for our healthcare professionals.

REFERENCES

- https://www.rocheplus.es/innovacion/investigacion-ciencia/ChatGPT.html

- https://journals.plos.org/digitalhealth/article?id=10.1371/journal.pdig.0000168

- https://www.mediktor.com/es

- https://www.clinicbarcelona.org/noticias/el-hospital-clinic-firma-un-acuerdo-con-mediktor-para-validar-su-aplicacion-que-permite-desarrollar-un-triaje-mas-eficiente

- https://gpt3demo.com/apps/glass-ai-health

- HealthITAnalytics: “Adventist Health Leverages AI-Powered Chatbot for Diabetes Care”: https://healthitanalytics.com/news/adventist-health-leverages-ai-powered-chatbot-for-diabetes-care

- https://www.mdpi.com/2227-9032/11/6/887

- https://www.insider.com/chatgpt-passes-medical-exam-diagnoses-rare-condition-2023-4